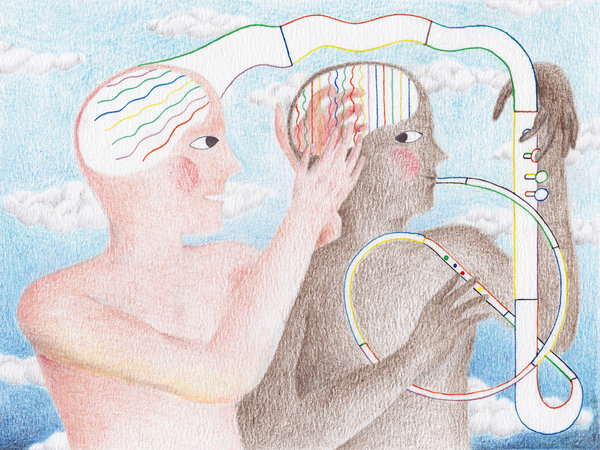

Humans have an impressive ability to take on other viewpoints – it’s crucial for a social species like ours. So why are some of us better at it than others?

Picture two friends, Sally and Anne, having a drink in a bar. While Sally is in the bathroom, Anne decides to buy another round, but she notices that Sally has left her phone on the table. So that no one can steal it, Anne puts the phone into her friend’s bag before heading to the bar. When Sally returns, where will she expect to see her phone?

If you said she would look at the table where she left it, congratulations! You have a theory of mind – the ability to understand that another person may have knowledge, ideas and beliefs that differ from your own, or from reality.

If that sounds like nothing out of the ordinary, perhaps it’s because we usually take it for granted. Yet it involves doing something no other animal can do to the same extent: temporarily setting aside our own ideas and beliefs about the world – that the phone is in the bag, in this case – in order to take on an alternative world view.

This process, also known as “mentalising”, not only lets us see that someone else can believe something that isn’t true, but also lets us predict other people’s behaviour, tell lies, and spot deceit by others. Theory of mind is a necessary ingredient in the arts and religion – after all, a belief in the spirit world requires us to conceive of minds that aren’t present – and it may even determine the number of friends we have.

Yet our understanding of this crucial aspect of our social intelligence is in flux. New ways of investigating and analysing it are challenging some long-held beliefs. As the dust settles, we are getting glimpses of how this ability develops, and why some of us are better at it than others. Theory of mind has “enormous cultural implications”, says Robin Dunbar, an evolutionary anthropologist at the University of Oxford. “It allows you to look beyond the world as we physically see it, and imagine how it might be different.”

The first ideas about theory of mind emerged in the 1970s, when it was discovered that at around the age of 4, children make a dramatic cognitive leap. The standard way to test a child’s theory of mind is called the Sally-Anne test, and it involves acting out the chain of events described earlier, only with puppets and a missing ball.

When asked, “When Sally returns, where will she look for the ball?”, most 3-year-olds say with confidence that she’ll look in the new spot, where Anne has placed it. The child knows the ball’s location, so they cannot conceive that Sally would think it was anywhere else.

Baby change

But around the age of 4, that changes. Most 4- and 5-year-olds realise that Sally will expect the ball to be just where she left it.

For over two decades that was the dogma, but more recently those ideas have been shaken. The first challenge came in 2005, when it was reported in Science (vol 308, p 255) that theory of mind seemed to be present in babies just 15 months old.

Such young children cannot answer questions about where they expect Sally to look for the ball, but you can tell what they’re thinking by having Sally look in different places and noting how long they stare: babies look for longer at things they find surprising.

When Sally searched for a toy in a place she should not have expected to find it, the babies did stare for longer. In other words, babies barely past their first birthdays seemed to understand that people can have false beliefs. More remarkable still, similar findings were reported in 2010 for 7-month-old infants (Science, vol 330, p 1830).

Some say that since theory of mind seems to be present in infants, it must also be present in 3-year-olds. Something about the design of the classic Sally-Anne test, these critics argue, must be confusing them.

Yet there’s another possibility: perhaps we gain theory of mind twice. From a very young age we possess a basic, or implicit, form of mentalising, so this theory goes, and then around age 4, we develop a more sophisticated version. The implicit system is automatic but limited in its scope; the explicit system, which allows for a more refined understanding of other people’s mental states, is what you need to pass the Sally-Anne test.

If you think that explanation sounds complicated, you’re not alone. “The key problem is explaining why you would bother acquiring the same concept twice,” says Rebecca Saxe, a cognitive scientist at the Massachusetts Institute of Technology.

Yet there are other mental skills that develop twice. Take our sense of number. Long before they can count, infants have an ability to gauge rough quantities; they can distinguish, for instance, between a general sense of “threeness” and “fourness”. Eventually, though, they do learn to count and multiply and so on, although the innate system still hums beneath the surface. Our decision-making ability, too, may develop twice. We seem to have an automatic and intuitive system for making gut decisions, and a second system that is slower and more explicit.

Double-think

So perhaps we also have a dual system for thinking about thoughts, says Ian Apperly, a cognitive scientist at the University of Birmingham, UK. “There might be two kinds of processes, on the one hand for speed and efficiency, and on the other hand for flexibility,” he argues (Psychological Review, vol 116, p 953).

Apperly has found evidence that we still possess the fast implicit system as adults. People were asked to study pictures showing a man looking at dots on a wall; sometimes the man could see all the dots, sometimes not. When asked how many dots there were, volunteers were slower and less accurate if the man could see fewer dots than they could. Even when trying not to take the man’s perspective into account, they couldn’t help but do so, says Apperly. “That’s a strong indication of an automatic process,” he says – in other words, an implicit system working at an unconscious level.

If this theory is true, it suggests we should pay attention to our gut feelings about people’s state of mind, says Apperly. Imagine surprising an intruder in your home. The implicit system might help you make fast decisions about what they see and know, while the explicit system could help you to make more calculated judgments about their motives. “Which system is better depends on whether you have time to make the more sophisticated judgement,” says Apperly.

The idea that we have a two-tier theory of mind is gaining ground. Further support comes from a study of people with autism, a group known to have difficulty with social skills, who are often said to lack theory of mind. In fact, tests on a group of high-functioning people with Asperger’s syndrome, a form of autism, showed they had the explicit system, yet they failed at non-verbal tests of the kind that reveal implicit theory of mind in babies (Science, vol 325, p 883). So people with autism can learn explicit mentalising skills, even without the implicit system, although the process remains “a little bit cumbersome”, says Uta Frith, a cognitive scientist at University College London, who led the work. The finding suggests that the capacity to understand others should not be so easily written off in those with autism. “They can handle it when they have time to think about it,” says Frith.

If theory of mind is not an all-or-nothing quality, does that help explain why some of us seem to be better than others at putting ourselves into other people’s shoes? “Clearly people vary,” points out Apperly. “If you think of all your colleagues and friends, some are socially more or less capable.”

Unfortunately, that is not reflected in the Sally-Anne test, the mainstay of theory of mind research for the past four decades. Nearly everyone over the age of 5 can pass it standing on their head.

To get the measure of the variation in people’s abilities, different approaches are needed. One is called the director task; based on a similar idea to Apperly’s dot pictures, this involves people moving objects around on a grid while taking into account the viewpoint of an observer. This test reveals how children and adolescents improve progressively as they mature, only reaching a plateau in their 20s.

How does that timing square with the fact that the implicit system – which the director task hinges on – is supposed to emerge in early infancy? Sarah-Jayne Blakemore, a cognitive neuroscientist at University College London who works with Apperly, has an answer. What improves, she reckons, is not theory of mind per se but how we apply it in social situations using cognitive skills such as planning, attention and problem-solving, which keep developing during adolescence. “It’s the way we use that information when we make decisions,” she says.

So teenagers can blame their reputation for being self-centred on the fact that they are still learning to apply their theory of mind. The good news for parents is that most adolescents will learn how to put themselves in others’ shoes eventually. “You improve your skills by experiencing social scenarios,” says Frith.

It is also possible to test people’s explicit mentalising abilities by asking them convoluted “who-thought-what-about-whom” questions. After all, we can do better than realising that our friend mistakenly thinks her phone will be on the table. If such a construct represents “second-order” theory of mind, most of us can understand a fourth-order sentence like: “John said that Michael thinks that Anne knows that Sally thinks her phone will be on the table.”

In fact, Dunbar’s team has shown that fourth-order theory of mind is the limit for about 20 per cent of the general population (British Journal of Psychology, vol 89, p 191). Sixty per cent of us can manage fifth-order theory of mind and the top 20 per cent can reach the heights of sixth order.

As well as letting us keep track of our complex social lives, this kind of mentalising is crucial for our appreciation of works of fiction. Shakespeare’s genius, according to Dunbar, was to make his audience work at the edge of their ability, tracking multiple mind states. In Othello, for instance, the audience has to understand that Iago wants jealous Othello to mistakenly think that his wife Desdemona loves Cassio. “He’s able to lift the audience to his limits,” says Dunbar.

So why do some of us operate at the Bard’s level while others are less socially capable? Dunbar argues it’s all down to the size of our brains.

According to one theory, during human evolution the prime driver of our expanding brains was the growing size of our social groups, with the resulting need to keep track of all those relatives, rivals and allies. Dunbar’s team has shown that among monkeys and apes, those living in bigger groups have a larger prefrontal cortex. This is the part of the brain’s outer layer that covers roughly the front third of our heads, where a lot of higher thought processes go on.

Last year, Dunbar applied that theory to a single primate species: us. His team got 40 people to fill in a questionnaire about the number of friends they had, and then imaged their brains in an MRI scanner. Those with the biggest social networks had a larger region of the prefrontal cortex tucked behind the eye sockets. They also scored better on theory of mind tests (Proceedings of the Royal Society B, vol 279, p 2157). “The size of the bits of prefrontal cortex involved in mentalising determine your mentalising competencies,” says Dunbar. “And your mentalising competencies then determine the number of friends you have.”

It’s a bold claim, and one that has not convinced everyone in the field. After all, correlation does not prove causation. Perhaps having lots of friends makes this part of the brain grow bigger, rather than the other way round, or perhaps a large social network is a sign of more general intelligence.

Lying robots

What’s more, there seem to be several parts of the brain involved in mentalising – perhaps unsurprisingly for such a complex ability. In fact, so many brain areas have been implicated that scientists now talk about the theory of mind “network” rather than a single region.

A type of imaging called fMRI scanning, which can reveal which parts of the brain “light up” for specific mental functions, strongly implicates a region called the right temporoparietal junction, located towards the rear of the brain, as being crucial for theory of mind. In addition, people with damage to this region tend to fail the Sally-Anne test.

There is further evidence for the involvement of the right temporoparietal junction. When Saxe temporarily disabled that part of the brain in healthy volunteers, by holding a magnet above the skull, they did worse at tests that involved considering others’ beliefs while making moral judgments (PNAS, vol 107, p 6753).

Despite the explosion of research in this area in recent years, there is still lots to learn about this nifty piece of mental machinery. As our understanding grows, it is not just our own skills that stand to improve. If we can figure out how to give mentalising powers to computers and robots, they could become a lot more sophisticated. “Part of the process of socialising robots might draw upon things we’re learning from how people think about people,” Apperly says.

For instance, programmers at the Georgia Institute of Technology in Atlanta have developed robots that can deceive each other and leave behind false clues in a high-tech game of hide-and-seek. Such projects may ultimately lead to robots that can figure out the thoughts and intentions of people.

For now, though, the remarkable ability to thoroughly worm our way into someone else’s head exists only in the greatest computer of all – the human brain.

(Article by Kirsten Weir, a science writer based in Minneapolis.)

http://beyondmusing.wordpress.com/2013/06/07/mind-reading-how-we-get-inside-other-peoples-heads/