Science is painting a dramatic picture of how childhood neglect damages developing brains, so stunting them that neglect might be likened to physically violent abuse.

The latest addition to this research narrative comes from a study of mice placed in isolation early in their lives, an experiment that, on its surface, might seem redundant: After all, we already know that neglect is bad for humans, to say nothing of mice.

But the key to the study is in the details. The researchers found striking abnormalities in tissues that transmit electrical messages across the brain, suggesting a specific mechanism for some of the dysfunctions seen in neglected human children.

“This is very strong evidence that changes in myelin cause some of the behavioral problems caused by isolation,” said neurologist Gabriel Corfas of Harvard Medical School, a co-author of the new study, released Sept. 13 in Science.

Corfas and his team, led by fellow Harvard Med neuroscientist Manabu Makinodan, put 21-day-old mice in isolation for two weeks, then returned them to their colonies. When the mice reached adolescence, the researchers compared their brains and behavior to those of mice that hadn’t been isolated.

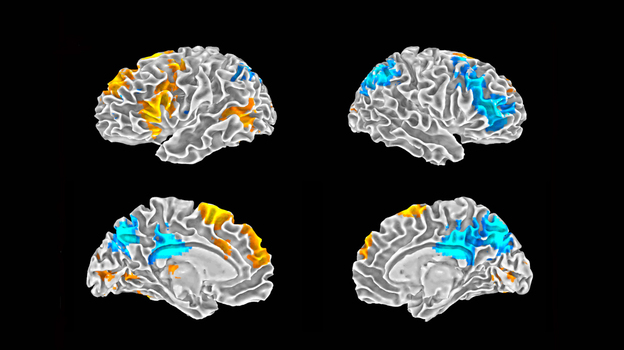

The isolated mice were antisocial, with striking deficits in memory. Their myelin, a cell layer that forms around neuronal networks like insulation around wires, was unusually thin, especially in the prefrontal cortex, a brain region central to cognition and personality.

Similar patterns of behavior have been seen, again and again, in children raised in orphanages or neglected by parents, as have changes to a variety of brain regions, including the prefrontal cortex. The myelin deficiencies identified by Corfas and Makinodan may underlie these defects.

“This is incredibly important data, because it gives us the neural mechanisms associated with the deleterious changes in the brain” that arise from neglect, said Nathan Fox, a cognitive neuroscientist at the University of Maryland.

Fox was not involved in the new study, but is part of a research group working on a long-term study of childhood neglect that is scientifically striking and poignantly tragic. Led by Harvard Medical School pediatricians Charles Nelson and Margaret Sheridan, the project has for the last 12 years tracked children who started their lives in an orphanage in Bucharest, Romania, a country infamous for the spartan, impersonal conditions of its orphanages.

Among children who spent their first two years in the orphanage, the researchers observed high levels of developmental problems, cognitive deficits, mental illness, and significant reductions in brain size. When the researchers measured the sheer amount of electrical activity generated by the brains of children who’d been isolated as toddlers, “it was like you’d had a rheostat, a dimmer, and dimmed down the amount of energy in these institutionalized children,” said Fox.

These problems persisted even when toddlers were later adopted, suggesting a crucial importance for those early years in setting a life’s neurological trajectory. “There’s a sensitive period for which, if a child is taken out of an institution, the effects appear to be remediated, and after which remediation is very, very difficult,” Fox said. The same pattern was observed in Corfas and Makinodan’s mice.

One phenomenon not studied in the mice, but regularly found in people neglected as children, is trouble with stress: mood disorders, anxiety, and general dysfunction in the body’s stress responses.

Those mechanisms have been studied in another animal, the rhesus monkey. While deprivation studies on non-human primates — and in particular chimpanzees — are controversial, the results from the monkey studies have been instructive.

In the monkeys, early-life isolation sets off a flood of hormones that permanently warp their responses to stress, leaving them anxious and prone to violent swings in mood.

Isolation is so damaging because humans, especially as infants, literally depend on social stimulation to shape their minds, said psychologist John Cacioppo of the University of Chicago.

“Human social processes were once thought to have been incidental to learning and cognition,” Cacioppo wrote in an e-mail. “However, we now think that the complexities and demands of social species have contributed to the evolution of the brain and nervous system and to various aspects of cognition.”

Corfas and Makinodan’s team linked specific genetic changes to the abnormalities in their mice, and hope the findings might someday inform the development of drugs that can help reverse isolation’s effects.

A more immediate implication of the research is social. As evidence of neglect’s severe, long-term consequences accumulates, it could shape the way people think not just of orphanages, but of policy matters like maternity and paternity leave, or the work requirements of single parents on welfare.

“What this work certainly says is that the first years of life are crucially important for brain architecture,” Fox said. “Infants and young children have to grow up in an environment of social relationships, and experiencing those is critical for healthy cognitive, social and psychological development. As a society, we should be figuring out how to encourage all that to happen.”

Thanks to Kebmobee for bringing this to the attention of the It’s Interesting community.

http://www.wired.com/wiredscience/2012/09/neuroscience-of-neglect/