A bioengineer and a geneticist at Harvard’s Wyss Institute have successfully stored 5.5 petabits of data (around 700 terabytes) in a single gram of DNA, smashing the previous DNA data density record by a factor of a thousand.

The work, carried out by George Church and Sri Kosuri, basically treats DNA as just another digital storage device. Instead of encoding binary data as magnetic regions on a hard drive platter, they synthesize strands of DNA that each store 96 bits, with each base representing one binary value (T and G = 1, A and C = 0).
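The bit-to-base mapping described above can be sketched in a few lines of Python. The mapping itself (A or C for 0, G or T for 1) comes from the article. Having two bases per bit presumably gives the encoder room to avoid long runs of the same base, which are awkward to synthesize and sequence. The rule used here to pick between the two candidates (never repeat the previous base) is a hypothetical stand-in, and the names `encode_bits` and `decode_bases` are illustrative only.

```python
# One bit per base, as described in the article: 0 -> A or C, 1 -> G or T.
ZERO_BASES = ("A", "C")
ONE_BASES = ("G", "T")


def encode_bits(bits: str) -> str:
    """Encode a string of '0'/'1' characters as a DNA base sequence.

    Hypothetical choice rule: take the first candidate base for the bit,
    unless it would repeat the previous base, in which case take the second.
    This guarantees that no two neighbouring bases are identical.
    """
    strand: list[str] = []
    for bit in bits:
        if bit not in "01":
            raise ValueError(f"not a bit: {bit!r}")
        candidates = ONE_BASES if bit == "1" else ZERO_BASES
        base = candidates[0]
        if strand and strand[-1] == base:
            base = candidates[1]
        strand.append(base)
    return "".join(strand)


def decode_bases(strand: str) -> str:
    """Turn a base sequence back into bits: A/C read as 0, G/T read as 1."""
    bits = []
    for base in strand:
        if base in ZERO_BASES:
            bits.append("0")
        elif base in ONE_BASES:
            bits.append("1")
        else:
            raise ValueError(f"not a DNA base: {base!r}")
    return "".join(bits)


print(encode_bits("0011"))                # prints ACGT
print(decode_bases(encode_bits("0011")))  # prints 0011
```

Because each bit can be written with either of two bases, the same data can map to many different strands, while decoding only needs to know which pair a base belongs to.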

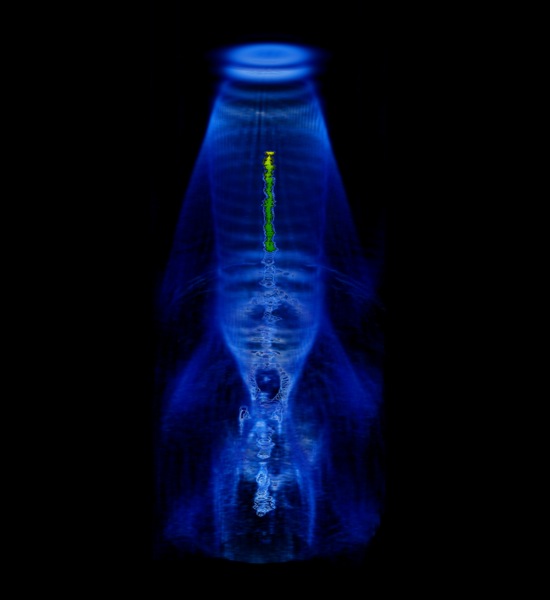

To read the data stored in DNA, you simply sequence it, just as if you were sequencing the human genome, and convert each of the TGAC bases back into binary. To aid with sequencing, each strand of DNA has a 19-bit address block at the start (the red bits in the image), so a whole vat of DNA can be sequenced out of order and then sorted into usable data using the addresses.
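The address-then-sort idea can be sketched as follows. Only the 19-bit address and 96-bit payload sizes come from the article; everything else is an assumption for illustration: the hypothetical helpers `write_strands` and `read_strands`, the simplified fixed mapping (0 to A, 1 to G) used when writing, zero-padding of the last chunk, and the reader knowing the original length in bytes.

```python
import random

ADDRESS_BITS = 19  # address block at the start of each strand (per the article)
PAYLOAD_BITS = 96  # data bits carried by each strand (per the article)


def to_bases(bits: str) -> str:
    # Simplified fixed mapping for this sketch: 0 -> A, 1 -> G.
    return "".join("G" if bit == "1" else "A" for bit in bits)


def to_bits(strand: str) -> str:
    # Reading accepts either base of each pair: A/C -> 0, G/T -> 1.
    return "".join("1" if base in "GT" else "0" for base in strand)


def write_strands(data: bytes) -> list[str]:
    """Split data into 96-bit chunks, each prefixed with its 19-bit address."""
    bits = "".join(format(byte, "08b") for byte in data)
    bits += "0" * (-len(bits) % PAYLOAD_BITS)  # zero-pad the final chunk
    count = len(bits) // PAYLOAD_BITS
    if count > 2 ** ADDRESS_BITS:
        raise ValueError("too much data for a 19-bit address space")
    strands = []
    for index in range(count):
        address = format(index, f"0{ADDRESS_BITS}b")
        payload = bits[index * PAYLOAD_BITS:(index + 1) * PAYLOAD_BITS]
        strands.append(to_bases(address + payload))
    return strands


def read_strands(strands: list[str], length: int) -> bytes:
    """Decode strands in any order, sort them by address, and rebuild the data."""
    chunks = {}
    for strand in strands:
        bits = to_bits(strand)
        chunks[int(bits[:ADDRESS_BITS], 2)] = bits[ADDRESS_BITS:]
    bits = "".join(chunks[address] for address in sorted(chunks))[: length * 8]
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


message = b"Stored in a vat of DNA, read back out of order."
strands = write_strands(message)
random.shuffle(strands)  # sequencing returns strands in no particular order
print(read_strands(strands, len(message)) == message)  # prints True
```

Because every strand carries its own address, the order in which strands come off the sequencer does not matter. Keying the chunks by address in a dict would also collapse the many identical copies a real vat contains.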

Scientists have been eyeing up DNA as a potential storage medium for a long time, for three very good reasons: it’s incredibly dense (you can store one bit per base, and a base is only a dozen or so atoms in size); it’s volumetric (a beaker) rather than planar (a hard disk); and it’s incredibly stable. Where other bleeding-edge storage media need to be kept in sub-zero vacuums, DNA can survive for hundreds of thousands of years in a box in your garage.

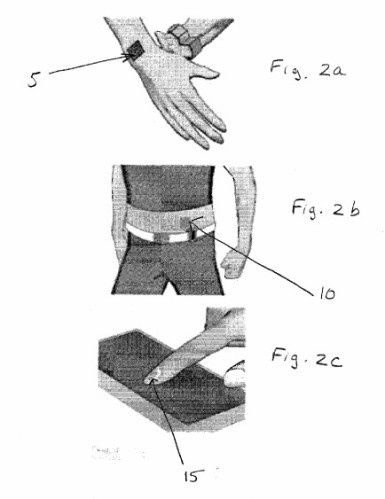

It is only with recent advances in microfluidics and labs-on-a-chip, though, that synthesizing and sequencing DNA has become an everyday task. While it took years for the original Human Genome Project to analyze a single human genome (some 3 billion DNA base pairs), modern lab equipment with microfluidic chips can do it in hours. This isn’t to say that Church and Kosuri’s DNA storage is fast, but it’s fast enough for very-long-term archival.

Just think about it for a moment: one gram of DNA can store 700 terabytes of data. That’s 14,000 50-gigabyte Blu-ray discs… in a droplet of DNA that would fit on the tip of your pinky. To store the same amount of data on hard drives, the densest storage medium in use today, you’d need roughly 233 3TB drives, weighing a total of 151 kilos. In Church and Kosuri’s case, they have successfully stored around 700 kilobytes of data in DNA (Church’s latest book, in fact) and proceeded to make 70 billion copies, totaling 44 petabytes of data stored. They claim, jokingly, that this makes it the best-selling book of all time!
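The capacity comparisons in this paragraph are easy to re-check. The sketch below uses decimal units (1 TB = 10^12 bytes) and only figures quoted in the article; the per-drive weight is not a sourced number but simply what 151 kilos spread over 233 drives implies. One small wrinkle shows up: 700 TB divided by 3 TB is about 233.3, so strictly 234 whole drives would be needed to hold all of it.

```python
# Decimal units, as used for drive and disc capacities.
GB = 10**9
TB = 10**12

# 5.5 petabits per gram, converted to bytes (8 bits per byte).
bytes_per_gram = 55 * 10**14 // 8
print(bytes_per_gram / TB)  # prints 687.5, i.e. "around 700 terabytes"

capacity = 700 * TB                       # rounded figure used in the article
blu_ray_discs = capacity // (50 * GB)     # 50 GB Blu-ray discs
drives_exact = capacity / (3 * TB)        # 3 TB hard drives, fractional
drives_needed = -(-capacity // (3 * TB))  # ceiling division: whole drives
kg_per_drive = 151 / 233                  # weight implied by the article's totals

print(blu_ray_discs)          # prints 14000
print(f"{drives_exact:.1f}")  # prints 233.3
print(drives_needed)          # prints 234
print(f"{kg_per_drive:.2f}")  # prints 0.65
```

Ceiling division via `-(-a // b)` keeps the drive count in exact integer arithmetic rather than relying on floating-point rounding.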

Looking forward, they foresee a world where biological storage would allow us to record anything and everything without reservation. Today, we wouldn’t dream of blanketing every square meter of Earth with cameras and recording every moment for all of human posterity; we simply don’t have the storage capacity. There is a reason that backed-up data is usually kept for only a few weeks or months: it just isn’t feasible to have warehouses full of hard drives, which could fail at any time. If the entirety of human knowledge (every book, uttered word, and funny cat video) can be stored in a few hundred kilos of DNA, it might just be possible to record everything.

http://refreshingnews99.blogspot.in/2012/08/harvard-cracks-dna-storage-crams-700.html

Thanks to kebmodee for bringing this to the attention of the It’s Interesting community.

![DNA to store data in future: scientists were able to convert a book into a strand of genetic code](https://its-interesting.com/wp-content/uploads/2012/08/dna-to-store-data-in-future-scientists-were-able-to-convert-a-book-into-a-strand-of-genetic-code-scientist2.jpg?w=640)