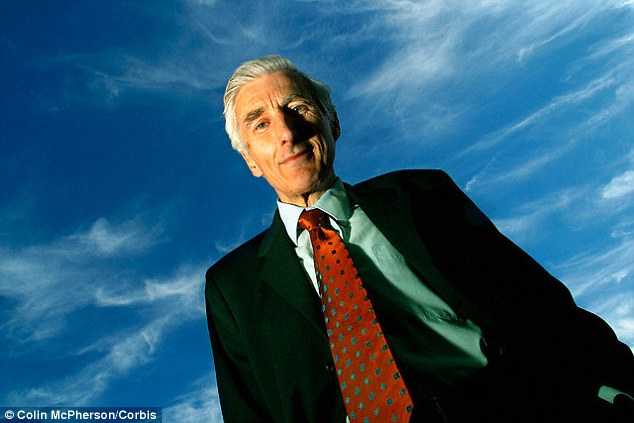

Elon Musk has said that there is only a “one in billions” chance that we’re not living in a computer simulation.

Our lives are almost certainly being conducted within an artificial world powered by AI and high-powered computers, as in The Matrix, the Tesla and SpaceX CEO suggested at a tech conference in California.

Mr Musk, who has donated huge amounts of money to research into the dangers of artificial intelligence, said that he hopes his prediction is true because otherwise it means the world will end.

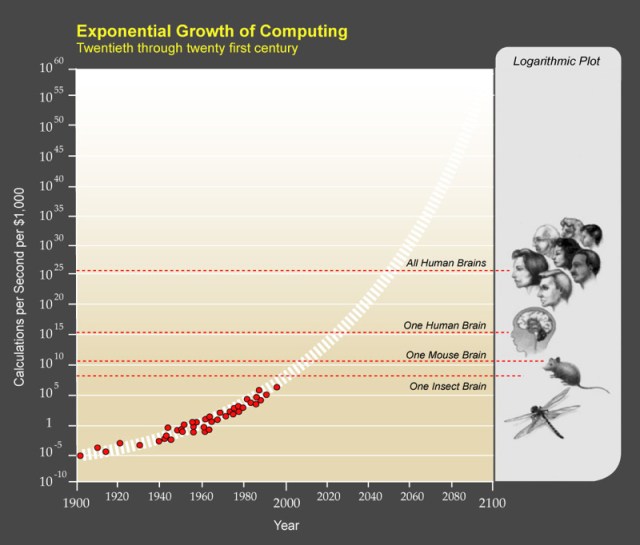

“The strongest argument for us probably being in a simulation I think is the following,” he told the Code Conference. “40 years ago we had Pong – two rectangles and a dot. That’s where we were.

“Now 40 years later we have photorealistic, 3D simulations with millions of people playing simultaneously and it’s getting better every year. And soon we’ll have virtual reality, we’ll have augmented reality.

“If you assume any rate of improvement at all, then the games will become indistinguishable from reality, just indistinguishable.”

He said that even if the rate of those advancements dropped by a factor of 1,000, we would still be moving forward at an intense speed relative to the age of life.

Since that would lead to games that are indistinguishable from reality and could be played anywhere, “it would seem to follow that the odds that we’re in ‘base reality’ is one in billions”, Mr Musk said.

Asked whether he was saying that the answer to the question of whether we are in a simulated computer game was “yes”, he said the answer is “probably”.

He said that, arguably, we should hope that we do live in a simulation. “Otherwise, if civilisation stops advancing, then that may be due to some calamitous event that stops civilisation.”

He said that either we will make simulations that we can’t tell apart from the real world, “or civilisation will cease to exist”.

Mr Musk said that he has had “so many simulation discussions it’s crazy”, and that it got to the point where “every conversation [he had] was the AI/simulation conversation”.

The question of whether what we see is real or simulated has perplexed humans since at least the ancient philosophers. But it has been given a new and different edge in recent years with the development of powerful computers and artificial intelligence, which some have argued shows how easily such a simulation could be created.