By Laura Counts

Can’t stop checking your phone, even when you’re not expecting any important messages? Blame your brain.

A new study by researchers at UC Berkeley’s Haas School of Business has found that information acts on the brain’s dopamine-producing reward system in the same way as money or food.

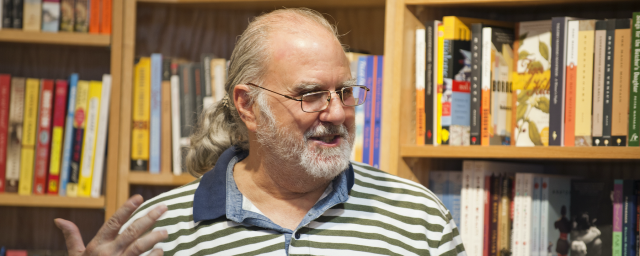

“To the brain, information is its own reward, above and beyond whether it’s useful,” says Assoc. Prof. Ming Hsu, a neuroeconomist whose research employs functional magnetic resonance imaging (fMRI), psychological theory, economic modeling, and machine learning. “And just as our brains like empty calories from junk food, they can overvalue information that makes us feel good but may not be useful, what some may call idle curiosity.”

The paper, “Common neural code for reward and information value,” was published this month in the Proceedings of the National Academy of Sciences. Authored by Hsu and then-graduate student Kenji Kobayashi, now a postdoctoral researcher at the University of Pennsylvania, it demonstrates that the brain converts information into the same scale it uses for money. It also lays the groundwork for unraveling the neuroscience behind how we consume information, and perhaps even behind digital addiction.

“We were able to demonstrate for the first time the existence of a common neural code for information and money, which opens the door to a number of exciting questions about how people consume, and sometimes over-consume, information,” Hsu says.

Rooted in the study of curiosity

The paper is rooted in the study of curiosity and what it looks like inside the brain. While economists have tended to view curiosity as a means to an end, valuable when the information it yields gives us an edge in making decisions, psychologists have long seen curiosity as an innate motivation that can spur action by itself. For example, sports fans might check the odds on a game even if they have no intention of ever betting.

Sometimes, we want to know something, just to know.

“Our study tried to answer two questions. First, can we reconcile the economic and psychological views of curiosity, or why do people seek information? Second, what does curiosity look like inside the brain?” Hsu says.

The neuroscience of curiosity

To understand more about the neuroscience of curiosity, the researchers scanned the brains of people while they played a gambling game. Each participant was presented with a series of lotteries and needed to decide how much they were willing to pay to find out more about the odds of winning. In some lotteries, the information was valuable—for example, when what seemed like a longshot was revealed to be a sure thing. In other cases, the information wasn’t worth much, such as when little was at stake.

For the most part, the study subjects made rational choices based on the economic value of the information (how much money it could help them win). But that didn’t explain all their choices: People tended to overvalue information in general, and particularly in higher-valued lotteries. The higher stakes appeared to increase people’s curiosity about the information, even when the information had no effect on their decision about whether to play.

The researchers determined that this behavior could be explained only by a model that captured both economic and psychological motives for seeking information. People acquired information based not only on its actual benefit but also on the anticipation of its benefit, whether or not the information was useful.

Hsu says that’s akin to wanting to know whether we received a great job offer, even if we have no intention of taking it. “Anticipation serves to amplify how good or bad something seems, and the anticipation of a more pleasurable reward makes the information appear even more valuable,” he says.
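The difference between the economic value of information and the pull of anticipation can be made concrete with a toy calculation. This is a minimal Python sketch, not the model from the paper: the lottery setup (a prize, a cost to play, and a prior belief over possible odds), all function names, and the anticipation bonus `kappa * prize` are illustrative assumptions. The instrumental (economic) value is how much better you expect to do if you learn the odds before choosing; the anticipatory term stands in for the psychological motive and grows with the stakes whether or not the information changes the decision.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Lottery:
    """Hypothetical lottery: win `prize` with an unknown probability, pay `cost` to play."""
    prize: float
    cost: float
    odds: tuple[float, ...]   # possible win probabilities
    prior: tuple[float, ...]  # belief that each of those odds is the true one


def expected_payoff(lot: Lottery, p: float) -> float:
    """Expected net payoff of playing when the win probability is p."""
    return p * lot.prize - lot.cost


def instrumental_value(lot: Lottery) -> float:
    """Economic value of learning the odds before deciding whether to play."""
    # Without information: decide once, using the average win probability.
    mean_p = sum(q * p for q, p in zip(lot.prior, lot.odds))
    without_info = max(expected_payoff(lot, mean_p), 0.0)
    # With information: learn the true odds, then play only if it pays off.
    with_info = sum(q * max(expected_payoff(lot, p), 0.0)
                    for q, p in zip(lot.prior, lot.odds))
    return with_info - without_info


def willingness_to_pay(lot: Lottery, kappa: float = 0.05) -> float:
    """Instrumental value plus an assumed anticipation bonus that scales with the prize."""
    anticipatory = kappa * lot.prize  # placeholder for the psychological motive
    return instrumental_value(lot) + anticipatory


# A longshot-or-sure-thing lottery, where knowing the odds changes the decision
sure_or_longshot = Lottery(prize=10.0, cost=5.0, odds=(0.1, 1.0), prior=(0.5, 0.5))
# Lotteries worth playing either way, so the information cannot change the decision
always_play_small = Lottery(prize=10.0, cost=1.0, odds=(0.5, 1.0), prior=(0.5, 0.5))
always_play_big = Lottery(prize=100.0, cost=1.0, odds=(0.5, 1.0), prior=(0.5, 0.5))

for name, lot in [("sure_or_longshot", sure_or_longshot),
                  ("always_play_small", always_play_small),
                  ("always_play_big", always_play_big)]:
    print(f"{name}: instrumental={instrumental_value(lot):.2f}, "
          f"willingness_to_pay={willingness_to_pay(lot):.2f}")
```

In the two `always_play` lotteries, learning the odds never changes the choice to play, so the instrumental value is zero, yet the sketch's willingness to pay still rises tenfold when the prize grows from 10 to 100. That is one simple way a model could capture curiosity about high-stakes information that has no bearing on the decision.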

Common neural code for information and money

How does the brain respond to information? Analyzing the fMRI scans, the researchers found that information about the games’ odds activated regions of the brain known to be involved in valuation (the striatum and the ventromedial prefrontal cortex, or VMPFC), the same dopamine-rich reward areas activated by food, money, and many drugs. This was the case whether or not the information was useful, that is, whether or not it changed the person’s original decision.

Next, using a machine learning technique called support vector regression, the researchers determined that the brain uses the same neural code for information about the lottery odds as it does for money. The technique allowed them to identify the neural code for how the brain responds to varying amounts of money, and then ask whether the same code could predict how much a person would pay for information. It could.

In other words, just as we can convert such disparate things as a painting, a steak dinner, and a vacation into a dollar value, the brain converts curiosity about information into the same common code it uses for concrete rewards like money, Hsu says.

“We can look into the brain and tell how much someone wants a piece of information, and then translate that brain activity into monetary amounts,” he says.
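The cross-decoding logic described above (train on money, test on information) can be sketched in a few lines of Python. This is an illustrative simulation, not the authors’ analysis pipeline: the voxel count, the single “shared axis” that makes money and information patterns alike, the noise level, the normalization of values to the range 0 to 1, and all function names are hypothetical assumptions. A from-scratch linear support vector regression, fit by stochastic subgradient descent on the epsilon-insensitive loss, stands in for a library implementation.

```python
import math
import random

N_VOXELS = 10  # hypothetical number of voxels in a valuation region


def unit_vector(n, rng):
    """Random direction in voxel space, normalized to length 1."""
    v = [rng.gauss(0.0, 1.0) for _ in range(n)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def simulate(n_trials, axis, rng, noise=0.05):
    """Hypothetical trials: pattern = value * axis + Gaussian voxel noise.
    Values are normalized to [0, 1] (e.g., dollars divided by the maximum)."""
    X, y = [], []
    for _ in range(n_trials):
        value = rng.uniform(0.0, 1.0)
        X.append([value * a + rng.gauss(0.0, noise) for a in axis])
        y.append(value)
    return X, y


def fit_linear_svr(X, y, epsilon=0.05, lam=1e-3, lr=0.05, epochs=50, seed=0):
    """Linear epsilon-insensitive SVR fit by stochastic subgradient descent on
    lam/2 * ||w||^2 + mean over trials of max(0, |y - (w.x + b)| - epsilon)."""
    rng = random.Random(seed)
    w, b = [0.0] * len(X[0]), 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            residual = y[i] - (sum(wj * xj for wj, xj in zip(w, X[i])) + b)
            # Subgradient of the epsilon-insensitive loss w.r.t. the prediction
            g = -1.0 if residual > epsilon else (1.0 if residual < -epsilon else 0.0)
            w = [wj - lr * (lam * wj + g * xj) for wj, xj in zip(w, X[i])]
            b -= lr * g
    return w, b


def predict(w, b, X):
    return [sum(wj * xj for wj, xj in zip(w, x)) + b for x in X]


def pearson(a, b):
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


rng = random.Random(42)
shared_axis = unit_vector(N_VOXELS, rng)  # assumption: one code for money and information

# Train only on money trials: voxel pattern -> normalized dollar amount
X_money, y_money = simulate(200, shared_axis, rng)
w, b = fit_linear_svr(X_money, y_money)

# Test on information trials: does the money decoder predict willingness to pay?
X_info, wtp_info = simulate(100, shared_axis, rng)
r = pearson(predict(w, b, X_info), wtp_info)
print(f"cross-decoding correlation: {r:.2f}")
```

Because the simulated information trials are built on the same axis as the money trials, a high correlation is expected here by construction. In real data, that shared code is exactly the hypothesis being tested: if information and money were represented by unrelated patterns, a decoder trained only on money would be expected to predict willingness to pay for information poorly.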

Raising questions about digital addiction

While the research does not directly address overconsumption of digital information, the fact that information engages the brain’s reward system is a necessary condition for the addiction cycle, he says. And it explains why we find those alerts saying we’ve been tagged in a photo so irresistible.

“The way our brains respond to the anticipation of a pleasurable reward is an important reason why people are susceptible to clickbait,” he says. “Just like junk food, this might be a situation where previously adaptive mechanisms get exploited now that we have unprecedented access to novel curiosities.”

How information is like snacks, money, and drugs—to your brain