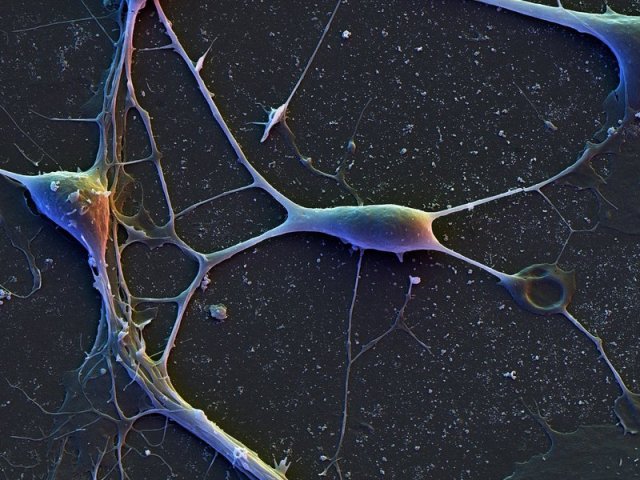

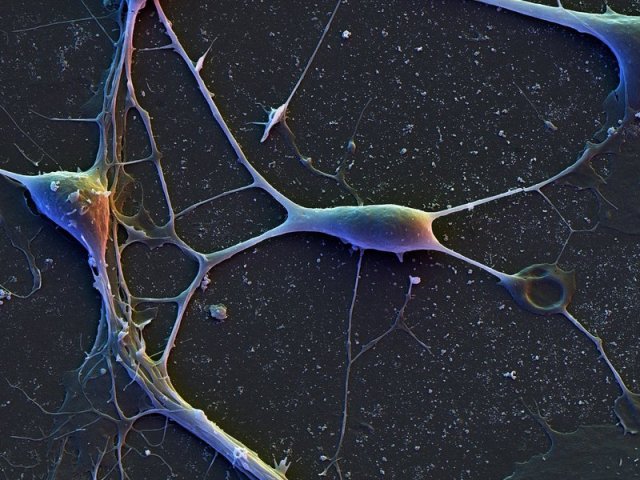

Human cortical neurons in the brain. (David Scharf/Corbis)

By Jerry Adler

Smithsonian Magazine

Ken Hayworth, a neuroscientist, wants to be around in 100 years but recognizes that, at 43, he’s not likely to make it on his own. Nor does he expect to get there preserved in alcohol or a freezer; despite the claims made by advocates of cryonics, he says, the ability to revivify a frozen body “isn’t really on the horizon.” So Hayworth is hoping for what he considers the next best thing. He wishes to upload his mind—his memories, skills and personality—to a computer that can be programmed to emulate the processes of his brain, making him, or a simulacrum, effectively immortal (as long as someone keeps the power on).

Hayworth’s dream, which he is pursuing as president of the Brain Preservation Foundation, is one version of the “technological singularity.” It envisions a future of “substrate-independent minds,” in which human and machine consciousness will merge, transcending biological limits of time, space and memory. “This new substrate won’t be dependent on an oxygen atmosphere,” says Randal Koene, who works on the same problem at his organization, Carboncopies.org. “It can go on a journey of 1,000 years, it can process more information at a higher speed, it can see in the X-ray spectrum if we build it that way.” Whether Hayworth or Koene will live to see this is an open question. Their most optimistic scenarios call for at least 50 years, and uncounted billions of dollars, to implement their goal. Meanwhile, Hayworth hopes to achieve the ability to preserve an entire human brain at death—through chemicals, cryonics or both—to keep the structure intact with enough detail that it can, at some future time, be scanned into a database and emulated on a computer.

That approach presumes, of course, that all of the subtleties of a human mind and memory are contained in its anatomical structure—conventional wisdom among neuroscientists, but it’s still a hypothesis. There are electrochemical processes at work. Are they captured by a static map of cells and synapses? We won’t know, advocates argue, until we try to do it.

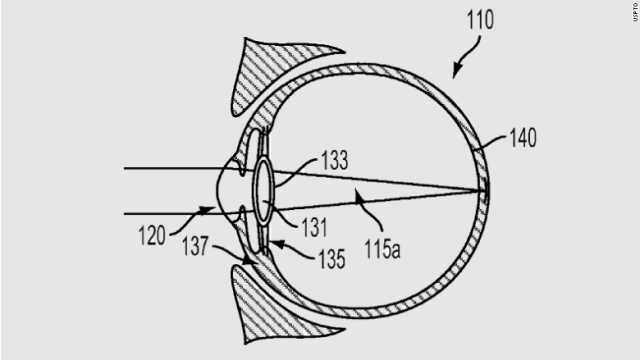

The initiatives require a big bet on the future of technology. A 3-D map of all the cells and synapses in a nervous system is called a “connectome,” and so far researchers have produced exactly one, for a roundworm called Caenorhabditis elegans, with 302 neurons and about 7,000 connections among them. A human brain, according to one reasonable estimate, has about 86 billion neurons and 100 trillion synapses. And then there’s the electrochemical activity on top of that. In 2013, announcing a federal initiative to produce a complete model of the human brain, Francis Collins, head of the National Institutes of Health, said it could generate “yottabytes” of data—a million million million megabytes. To scan an entire human brain at the scale Hayworth thinks is necessary—effectively slicing it into virtual cubes ten nanometers on a side—would require, with today’s technology, “a million electron microscopes running in parallel for ten years.” Mainstream researchers are divided between those who regard Hayworth’s quest as impossible in practice, and those, like Miguel Nicolelis of Duke University, who consider it impossible in theory. “The brain,” he says, “is not computable.”
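The figures in this paragraph can be sanity-checked with some back-of-envelope arithmetic. The sketch below is only illustrative: the neuron, synapse, and voxel-size numbers are the ones quoted above, the byte units are standard SI definitions, and the brain volume of roughly 1,200 cubic centimeters is an assumption introduced here, not a figure from the article. The function and constant names are hypothetical.

```python
# Back-of-envelope scale check using the figures quoted in the article.
# SI (decimal) units are assumed throughout.
MEGABYTE = 10**6      # bytes
YOTTABYTE = 10**24    # bytes

# Figures quoted in the article.
WORM_NEURONS = 302
WORM_CONNECTIONS = 7_000
HUMAN_NEURONS = 86 * 10**9
HUMAN_SYNAPSES = 100 * 10**12
VOXEL_SIDE_NM = 10    # "virtual cubes ten nanometers on a side"

# ASSUMPTION (not from the article): a rough adult brain volume.
ASSUMED_BRAIN_VOLUME_CM3 = 1_200
NM_PER_CM = 10**7


def megabytes_per_yottabyte():
    """How many megabytes make up one yottabyte."""
    return YOTTABYTE // MEGABYTE


def scale_factors():
    """Ratio of human to C. elegans neuron and connection counts."""
    return HUMAN_NEURONS / WORM_NEURONS, HUMAN_SYNAPSES / WORM_CONNECTIONS


def voxel_count(volume_cm3=ASSUMED_BRAIN_VOLUME_CM3, side_nm=VOXEL_SIDE_NM):
    """Number of cubes of the given side length that fill the given volume."""
    voxels_per_cm = NM_PER_CM // side_nm   # cubes along one centimeter
    return volume_cm3 * voxels_per_cm**3


if __name__ == "__main__":
    neuron_ratio, synapse_ratio = scale_factors()
    print(f"Megabytes per yottabyte: {megabytes_per_yottabyte():.0e}")
    print(f"Human/worm neuron ratio: {neuron_ratio:.2e}")
    print(f"Human/worm connection ratio: {synapse_ratio:.2e}")
    print(f"10 nm voxels in the assumed volume: {voxel_count():.1e}")
```

Under these assumptions, a yottabyte works out to 10^18 megabytes, which matches "a million million million," and the human brain has roughly 280 million times as many neurons as the one organism whose connectome has been mapped. The voxel count depends entirely on the assumed volume, so it should be read as an order-of-magnitude guide only.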

And what does it mean for a mind to exist outside a brain? One immediately thinks of the disembodied HAL in 2001: A Space Odyssey. But Koene sees no reason that, if computers continue to grow smaller and more powerful, an uploaded mind couldn’t have a body—a virtual one, or a robotic one. Will it sleep? Experience hunger, pain, desire? In the absence of hormones and chemical neurotransmitters, will it feel emotion? It will be you, in a sense, but will you be it?

These questions don’t trouble Hayworth. To him, the brain is the most sophisticated computer on earth, but only that, and he figures his mind could also live in one made of transistors instead. He hopes to become the first human being to live entirely in cyberspace, to send his virtual self into the far future.

Read more: http://www.smithsonianmag.com/innovation/quest-upload-mind-into-digital-space-180954946/