Eugene Shoemaker

Carolyn Shoemaker

Carolyn and Eugene Shoemaker stand by the 18″ Schmidt Telescope at the Palomar Observatory. They used it to search for asteroids and comets that may come close to Earth’s orbit.

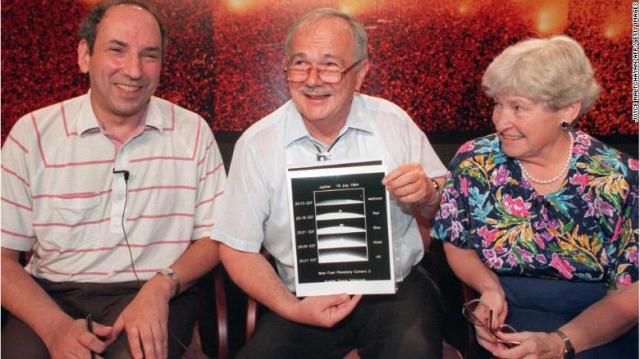

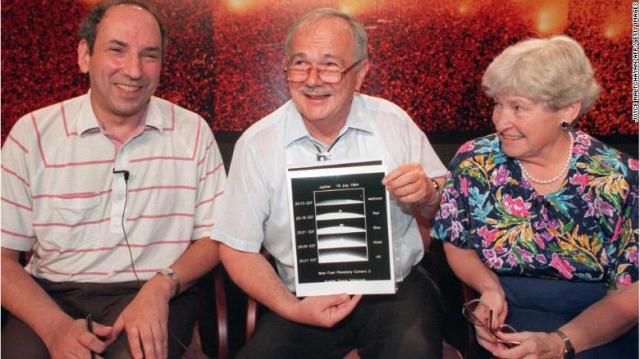

Scientist Eugene Shoemaker (C) pictured on July 17, 1994, in Greenbelt, Maryland, with a series of images of the Shoemaker-Levy 9 comet impact with Jupiter. At right is his wife Carolyn and at left is David Levy.

Today, we know Neil Armstrong and Buzz Aldrin as the first men to land on the moon, 51 years ago. But if not for a turn of events, history might also have known another name: Eugene Shoemaker.

Thirty years after that one small step for mankind, Eugene would make his own extraordinary journey to the moon.

Chapter 1: Boy meets girl

In the summer of 1950, Carolyn Spellmann was a college student living in Chico, California. It was there that she would first meet her future husband and science partner, Eugene Shoemaker.

“He came to be my brother’s best man at his wedding,” Carolyn recalled. “He came there, and I opened the back door, and there was Gene.”

That first meeting turned into a long-distance pen pal relationship, and a year later, they were married.

Chapter 2: Reaching for the stars

It was Gene who would encourage Carolyn to step behind a telescope, sparking a lifelong passion and profession.

“Gene simply said, ‘Maybe I would like to see things through the telescope,’” Carolyn remembered. “I thought, ‘No, I’ve never stayed awake a night in my life, I don’t think so.’ But I gradually fell into the program, into the work.”

Carolyn went on to become a celebrated astronomer, and even held the Guinness World Record for the greatest number of comets discovered by an individual. “That earned me the nickname of Mrs. Comet,” said Carolyn.

While Carolyn focused her research on comets and near-Earth asteroids, her husband was interested in the things that asteroids created — craters.

“He always thought big, and so the origin of the universe was his project,” Carolyn said. “The more we found that had craters on them, the more excited he was.”

Chapter 3: Shooting for the moon

But for Eugene, the moon was always the ultimate goal.

“Gene wanted to go to the moon more than anything since he was a very young man,” Carolyn said. “Gene felt that putting a man on the moon was a step in science … He felt that we had a lot to learn about the origin of the moon, and therefore, other planets.”

So, in 1961, when President John F. Kennedy announced that the United States would be sending a man to the moon before the end of the decade, Eugene’s life changed forever. As a geologist dedicated to studying craters, he wanted the chance to stand on the moon and study its surface with his own two hands.

“Gene thought that he was going to the moon,” Carolyn said. “He wanted to, he worked very hard toward that end. Gene was terribly excited and worried, too, because he felt it was too soon. Too soon, he wasn’t prepared and ready, yet, he was still learning lots of things that he would need to know.”

Chapter 4: A dream deferred

But it wasn’t his time. A failed medical test stopped his dreams in their tracks.

“It was discovered that he had Addison’s disease, which is a failure of the adrenal glands,” Carolyn recalled.

“That meant that there was no prospect at all of his ever going to the moon.”

Carolyn said Eugene “felt like his goal had suddenly disappeared.”

“At the same time, he was not a quitter,” she added.

Eugene continued to work to bring qualified people into the astronaut training program.

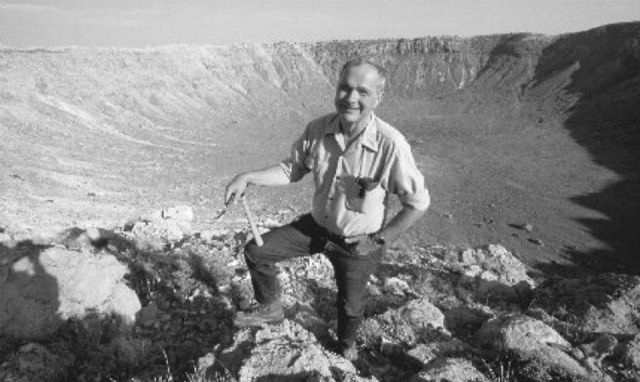

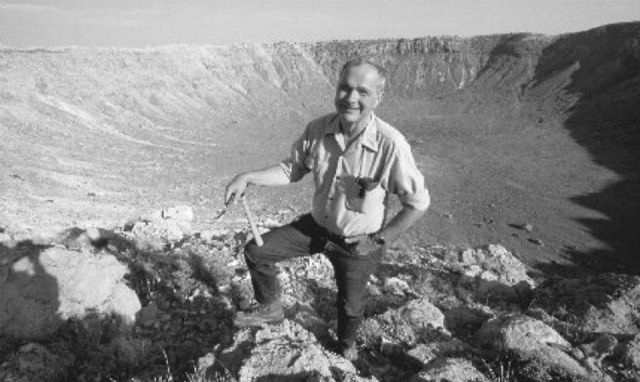

“He helped train Neil Armstrong, he helped train many of the astronauts,” Carolyn said. “He took the first group, and then several other groups to Meteor Crater (in Arizona).”

Meteor Crater was used as a training ground for astronauts because it resembled the surface of the moon, which is dotted with impact craters.

Chapter 5: Turning their attention

While Eugene tucked away his hope of going to the moon, he and Carolyn set up an observation program at Palomar Observatory in California, looking to uncover near-Earth objects. That led them to one of their greatest discoveries — Comet Shoemaker-Levy 9, the comet that collided with Jupiter. It was the first time in history humans had observed a collision between two bodies in the solar system.

“He let the dream of going to the moon himself go, he was realistic about it,” Carolyn said. “At the same time, it was still on his mind. When we would do our observing program, he would be looking at the moon with that in mind, I’m sure.”

Eventually, Carolyn and Eugene would put space behind them and turn their attention to their own backyard.

“Our focus changed over the years from looking up at the moon and looking at the sky only, to considering what would happen on Earth,” Carolyn said. “Gene had a dream of seeing an asteroid hit the Earth.”

Their search for impact craters took them all over the world, with a special focus on Australia.

“The trips to Australia were rather special,” Carolyn said. “We went to Australia because it had the oldest land surface available to study.”

“We were living out of our truck … We were able to camp out under the stars, which was really special because their sky was just magnificent, and it was different from ours. It was upside down.”

Chapter 6: A fateful day

On July 18, 1997, Eugene and Carolyn were driving to meet a friend who would help them with some crater-mapping.

“We were just looking off in the distance, talking about how much fun we were having, what we were going to do,” Carolyn remembers. “Then suddenly, there appeared a Land Rover in front of us, and that was it.”

The two vehicles collided, and Eugene died.

“I had been hurt and I thought to myself, ‘Well, Gene will come around like he always does and rescue me,’” Carolyn recalls. “So I waited, and I called, and nothing happened.”

Chapter 7: Getting the call

While Carolyn was recovering in the hospital, she received a call from Carolyn Porco, a former student of Eugene’s who had been working on the Lunar Prospector space probe mission with NASA.

“She said, ‘I’m here in Palo Alto with some people who are working on Lunar Prospector,’” Carolyn remembers. “‘They’re about to send a mission up to the moon. I wonder if you would like to put Gene’s ashes on the moon?’”

“I said, ‘Yes … I think that would be wonderful.’”

On January 6, 1998, the Lunar Prospector was launched, carrying Eugene’s ashes on board. “The whole family was there to wave Gene goodbye,” Carolyn said.

Chapter 8: A telling passage

An epigraph, laser-etched onto a piece of brass foil, was sent up on the space probe along with Eugene’s remains. It included a passage from Shakespeare’s “Romeo and Juliet.”

“And, when he shall die,

Take him and cut him out in little stars,

And he will make the face of heaven so fine

That all the world will be in love with night,

And pay no worship to the garish sun.”

After the Prospector’s mission was completed, it ran out of fuel and crashed into the moon near its south pole. The impact created its own crater, and that’s where Eugene’s ashes remain today.

“Gene spent most of his life thinking about craters, about the moon,” Carolyn said. “It was ironic that he ended his life also with the moon … but he would have been very pleased to know that happened.”

Epilogue

A few years prior to his death, while receiving the William Bowie Medal for his contributions to geophysics, Eugene noted that “not going to the moon and banging on it with my own hammer has been my biggest disappointment in life.”

“But then, I probably wouldn’t have gone to Palomar Observatory to take some 25,000 films of the night sky with Carolyn,” he continued. “We wouldn’t have had the thrills of finding those funny things that go bump in the night.”

Carolyn misses him always. To this day, she’ll look up to the moon and imagine him there with his rocks — looking down.

To hear her say it, he still lights up every single one of her night skies.

https://www.cnn.com/2020/07/26/us/man-on-the-moon-ashes-scn-trnd/index.html